Big Data Technologies

Master I MIDS & Informatique

Université Paris Cité

2024-02-19

File formats


You will need to choose the right format for your data

The right format typically depends on the use case

Why different file formats?

A major bottleneck for big data applications is the time spent finding data in a particular location and writing it back to another location

This is even harder with large datasets, evolving schemas, or storage constraints

Several Hadoop file formats have evolved to ease these issues across a number of use cases

File formats (trade-offs)

Choosing an appropriate file format has the following potential benefits

Faster reads or faster writes

Splittable files

Schema evolution support (schema changes over time)

Advanced compression support

Some file formats are designed for general use

Others for more specific use cases

Some with specific data characteristics in mind

Main file formats


We shall talk about the core concepts and use cases for the following popular data formats:

- Avro: https://avro.apache.org
- Parquet: https://parquet.apache.org
- ORC: https://orc.apache.org

Avro

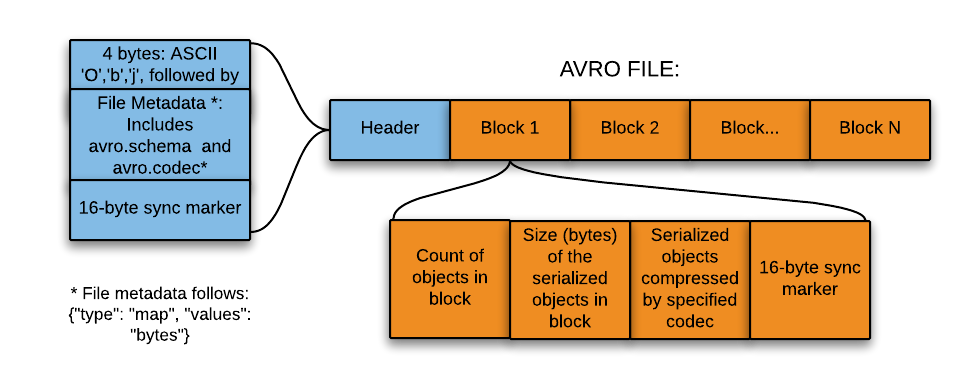

Avro: Principles

Avro is a row-based data format and data serialization system released by the Hadoop working group in 2009

The data schema is stored as JSON in the file header. The rest of the data is stored in a binary format to make it compact and efficient

Avro is language-neutral and can be used from many languages (currently C, C++, ..., Python, and R)

One shining point of Avro: robust support for schema evolution
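As an illustration of how Avro keeps its binary data compact, here is a minimal Python sketch of the zig-zag, variable-length coding that the Avro specification uses for `int` and `long` values: small magnitudes, positive or negative, fit in a single byte. The function names are hypothetical, and in practice an Avro library does this for you.

```python
def zigzag(n: int) -> int:
    """Map signed to unsigned so small magnitudes stay small: 0->0, -1->1, 1->2, -2->3, ..."""
    return 2 * n if n >= 0 else -2 * n - 1


def encode_long(n: int) -> bytes:
    """Zig-zag the value, then emit 7 bits per byte; the high bit means 'more bytes follow'."""
    z = zigzag(n)
    out = bytearray()
    while True:
        byte = z & 0x7F
        z >>= 7
        if z:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def decode_long(data: bytes) -> int:
    """Inverse of encode_long: gather the 7-bit groups, then undo the zig-zag mapping."""
    z = shift = 0
    for b in data:
        z |= (b & 0x7F) << shift
        shift += 7
        if not b & 0x80:
            break
    return (z >> 1) ^ -(z & 1)


print(encode_long(-1))  # b'\x01'
print(encode_long(64))  # b'\x80\x01'
```

Compared with a fixed 8-byte encoding for every long, any value between -64 and 63 takes a single byte here.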

Avro: rationale

Avro provides rich data structures: you can create a record that contains an array, an enumerated type, and a sub-record
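A sketch of such a schema, written as a Python dict and serialized to the kind of JSON text Avro keeps in the file header. The record, field, and enum names (`Order`, `Status`, `Customer`, `example.shop`) are made up for illustration.

```python
import json

# Hypothetical schema: a record holding an array, an enum, and a nested sub-record.
order_schema = {
    "type": "record",
    "name": "Order",
    "namespace": "example.shop",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "items", "type": {"type": "array", "items": "string"}},
        {"name": "status",
         "type": {"type": "enum", "name": "Status",
                  "symbols": ["PENDING", "SHIPPED", "DELIVERED"]}},
        {"name": "customer",
         "type": {"type": "record", "name": "Customer",
                  "fields": [
                      {"name": "name", "type": "string"},
                      # Optional field: a union with null, defaulting to null.
                      {"name": "email", "type": ["null", "string"], "default": None},
                  ]}},
    ],
}

# The schema travels with the data as plain JSON text.
schema_json = json.dumps(order_schema, indent=2)
print(schema_json)
```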

Avro is an ideal candidate for storing data in a data lake since:

Data is usually read as a whole in a data lake for further processing by downstream systems

Downstream systems can retrieve schemas easily from files (no need to store the schemas separately).

Any source schema change is easily handled

Avro: organization

Parquet

Parquet: History and Principles

Parquet is an open-source file format for Hadoop created by Cloudera and Twitter in 2013

It stores nested data structures in a flat columnar format

Compared to traditional row-oriented approaches, Parquet is more efficient in terms of storage and performance

It is especially good for queries that need to read a small subset of columns from a data file with many columns: only the required columns are read (optimized I/O)

Parquet: Row-wise VS columnar storage format

If you have a dataframe like this:

+----+-------+----------+
| ID | Name  | Product  |
+----+-------+----------+
| 1  | name1 | product1 |
| 2  | name2 | product2 |
| 3  | name3 | product3 |
+----+-------+----------+

In the row-wise storage format, records are contiguous in the file:

In the columnar storage format, by contrast, columns are stored together:

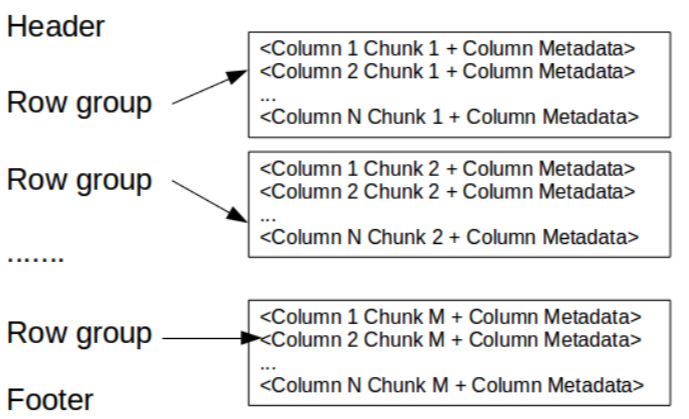
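The two layouts can be mimicked in a few lines of Python by flattening the example dataframe in row order and in column order. This is only an in-memory model of the idea (real files add encoding, compression, and metadata), and the helper names are hypothetical.

```python
rows = [
    (1, "name1", "product1"),
    (2, "name2", "product2"),
    (3, "name3", "product3"),
]
N_ROWS, N_COLS = len(rows), len(rows[0])


def row_wise(rows):
    """Row-wise layout: the values of each record are contiguous."""
    return [value for row in rows for value in row]


def columnar(rows):
    """Columnar layout: the values of each column are contiguous."""
    return [row[c] for c in range(N_COLS) for row in rows]


def read_column_row_wise(layout, c):
    # The column is scattered: we have to step over every record.
    return layout[c::N_COLS]


def read_column_columnar(layout, c):
    # The column is one contiguous slice: only these values are touched.
    return layout[c * N_ROWS:(c + 1) * N_ROWS]


print(row_wise(rows))   # [1, 'name1', 'product1', 2, 'name2', 'product2', 3, 'name3', 'product3']
print(columnar(rows))   # [1, 2, 3, 'name1', 'name2', 'name3', 'product1', 'product2', 'product3']
print(read_column_columnar(columnar(rows), 1))  # ['name1', 'name2', 'name3']
```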

Parquet: organization

This makes columnar storage more efficient when querying a few columns from the table

No need to read whole records, but only the required columns

A unique feature of Parquet is that even nested fields can be read individually, without reading all the other fields

Parquet uses a record shredding and assembly algorithm to store nested structures in a columnar fashion
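A heavily simplified sketch of the shredding idea: every leaf of a nested record becomes its own column, named by its path, and records can be reassembled from any subset of those columns. Real Parquet follows the record shredding and assembly algorithm from the Dremel paper, which also stores repetition and definition levels to handle repeated and optional fields; this sketch leaves those out and assumes every record has the same non-repeated fields. All names are hypothetical.

```python
from collections import defaultdict


def shred(records):
    """Split nested records into one flat column per leaf path, e.g. 'customer.address.city'."""
    columns = defaultdict(list)

    def walk(value, path):
        if isinstance(value, dict):
            for key, child in value.items():
                walk(child, f"{path}.{key}" if path else key)
        else:
            columns[path].append(value)

    for record in records:
        walk(record, "")
    return dict(columns)


def assemble(columns, paths):
    """Rebuild (partial) nested records using only the requested leaf columns."""
    out = [{} for _ in range(len(columns[paths[0]]))]
    for path in paths:
        *parents, leaf = path.split(".")
        for record, value in zip(out, columns[path]):
            node = record
            for key in parents:
                node = node.setdefault(key, {})
            node[leaf] = value
    return out


records = [
    {"id": 1, "customer": {"name": "Alice", "address": {"city": "Paris", "zip": "75013"}}},
    {"id": 2, "customer": {"name": "Bob", "address": {"city": "Lyon", "zip": "69001"}}},
]
cols = shred(records)
# Reading one nested field touches a single column, not the whole 'customer' struct.
print(cols["customer.address.city"])  # ['Paris', 'Lyon']
```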

Parquet: organization (continued)

Parquet: organization (lexicon)

The main entities in a Parquet file are the following:

- Row group: a horizontal partitioning of the data into rows. A row group consists of a column chunk for each column in the dataset

- Column chunk: a chunk of the data for a particular column. Column chunks live in a particular row group and are guaranteed to be contiguous in the file

- Page: column chunks are divided up into pages written back to back. The pages share a common header, and readers can skip the pages they are not interested in
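A toy model of this hierarchy in Python: rows are cut into row groups, each row group holds one column chunk per column, and each chunk is cut into pages. Sizes are counted in rows for simplicity (real writers size row groups and pages in bytes), and `row_group_size` / `page_size` are hypothetical parameters. Reading a subset of columns then visits only the chunks of those columns.

```python
def build_layout(rows, names, row_group_size, page_size):
    """Model: list of row groups; each maps column name -> column chunk (a list of pages)."""
    layout = []
    for start in range(0, len(rows), row_group_size):
        group = rows[start:start + row_group_size]
        chunks = {}
        for i, name in enumerate(names):
            values = [row[i] for row in group]
            chunks[name] = [values[p:p + page_size] for p in range(0, len(values), page_size)]
        layout.append(chunks)
    return layout


def read_columns(layout, wanted):
    """Projection: iterate over the pages of the wanted columns only."""
    result = {name: [] for name in wanted}
    for row_group in layout:
        for name in wanted:
            for page in row_group[name]:
                result[name].extend(page)
    return result


rows = [(i, f"name{i}", f"product{i}") for i in range(1, 6)]
layout = build_layout(rows, ("ID", "Name", "Product"), row_group_size=4, page_size=2)
print(layout[0]["ID"])                 # [[1, 2], [3, 4]]
print(read_columns(layout, ["Name"]))  # {'Name': ['name1', 'name2', 'name3', 'name4', 'name5']}
```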

About Parquet

Parquet: headers and footers

- The header just contains a 4-byte magic number “PAR1” that identifies the file as a Parquet file

- The footer contains:
  - File metadata: the start locations of all the column metadata. Readers first read the file metadata to find the column chunks they need; the column chunks are then read sequentially. It also includes the format version, the schema, and any extra key-value pairs
  - The length of the file metadata (4-byte)
  - The magic number “PAR1” (4-byte)
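Because the metadata length and the closing magic number sit at fixed offsets from the end of the file, a reader can locate the footer from the last 8 bytes. A minimal sketch on a synthetic byte string (not a real Parquet file): it checks both magic numbers and slices out the metadata, which in real files is Thrift-encoded and would need a Parquet library to decode. The function names are hypothetical.

```python
import struct

MAGIC = b"PAR1"


def metadata_length(data: bytes) -> int:
    """Read the 4-byte little-endian metadata length that precedes the trailing magic."""
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: PAR1 magic number missing")
    (length,) = struct.unpack("<I", data[-8:-4])
    return length


def metadata_bytes(data: bytes) -> bytes:
    """Slice out the (still encoded) file metadata that sits just before the length field."""
    end = len(data) - 8
    return data[end - metadata_length(data):end]


# Synthetic bytes with Parquet-like framing: magic, data, metadata, length, magic.
fake = MAGIC + b"<column chunks>" + b"<metadata>" + struct.pack("<I", len(b"<metadata>")) + MAGIC
print(metadata_length(fake))  # 10
print(metadata_bytes(fake))   # b'<metadata>'
```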

ORC

ORC: principles

ORC stands for Optimized Row Columnar file format. It was created by Hortonworks in 2013 to speed up Hive

The ORC file format provides a highly efficient way to store data

It is a raw columnar data format highly optimized for reading, writing, and processing data in Hive

It stores data compactly and makes it possible to quickly skip irrelevant parts

About ORC: organization

ORC stores collections of rows in one file. Within the collection, row data is stored in a columnar format

An ORC file contains groups of row data called stripes, along with auxiliary information in a file footer. At the end of the file, a postscript holds compression parameters and the size of the compressed footer

The default stripe size is 250 MB. Large stripe sizes enable large, efficient reads from HDFS

The file footer contains a list of the stripes in the file, the number of rows per stripe, and each column’s data type. It also contains column-level aggregates: count, min, max, and sum

About ORC (continued)

Index data includes min and max values for each column and the row positions within each column

ORC indexes are used only for the selection of stripes and row groups, not for answering queries
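The sketch below mimics this behaviour with hypothetical `Stripe` objects carrying min/max statistics for one column. The statistics prune stripes that cannot match a range predicate, but the surviving stripes are still read and filtered row by row, since min/max only bound the values. This is a conceptual model, not ORC's reader API.

```python
from dataclasses import dataclass, field


@dataclass
class Stripe:
    values: list                        # values of one column stored in this stripe
    min_value: int = field(init=False)
    max_value: int = field(init=False)

    def __post_init__(self):
        # Statistics are computed once, at write time, and stored alongside the data.
        self.min_value = min(self.values)
        self.max_value = max(self.values)


def select_stripes(stripes, low, high):
    """Keep only stripes whose [min, max] range overlaps the predicate [low, high]."""
    return [s for s in stripes if s.max_value >= low and s.min_value <= high]


def range_query(stripes, low, high):
    """Use the statistics to skip stripes, then filter the rows of the remaining ones."""
    candidates = select_stripes(stripes, low, high)
    matches = [v for s in candidates for v in s.values if low <= v <= high]
    return matches, len(candidates)


stripes = [Stripe(list(range(0, 100))), Stripe(list(range(100, 200))), Stripe([250, 205, 299])]
print(range_query(stripes, 150, 152))  # ([150, 151, 152], 1)
```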

About ORC

ORC file format has many advantages such as:

Hive type support, including DateTime, decimal, and the complex types (struct, list, map, and union)

Concurrent reads of the same file

Ability to split files without scanning for markers

Ability to estimate an upper bound on heap memory allocation, based on the information in the file footer

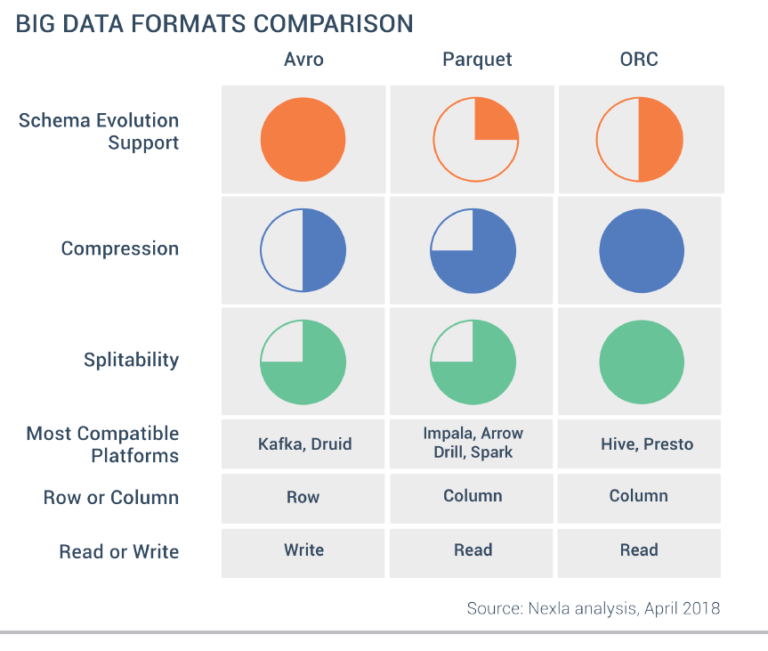

Comparison between formats

Avro versus Parquet

Avro is a row-based storage format, whereas Parquet is a columnar storage format

Parquet is much better for analytical querying, i.e., reads and queries are much more efficient than writes

Write operations are more efficient in Avro than in Parquet

Avro is more mature than Parquet for schema evolution: Parquet supports only schema append, whereas Avro supports more, such as adding or modifying columns

Parquet is ideal for querying a subset of columns in a multi-column table

Avro is ideal for operations where all the columns are needed (such as in an ETL workflow)

ORC vs Parquet

Parquet is better at storing nested data

ORC is better at predicate pushdown (SQL queries on a data file are better optimized: chunks of data can be skipped directly while reading)

ORC is more compression-efficient

In summary…

How to choose a file format

Read/write intensive & query pattern

Row-based file formats are overall better for storing write-intensive data because appending new records is easier

If only a small subset of columns is queried frequently, columnar formats will be better since only those needed columns will be accessed and transmitted (whereas row formats need to pull all the columns)

Compression

Compression is one of the key aspects to consider since compression helps reduce the resources required to store and transmit data

Columnar formats are better than row-based formats in terms of compression because storing the same type of values together allows more efficient compression

In columnar formats, a different, suitable encoding can be used for each column
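Two simple encodings of the kind columnar formats apply per column, run-length encoding and dictionary encoding, sketched in Python. Parquet and ORC both rely on variants of these, but their on-disk versions (bit-packing, hybrid modes) are more elaborate; the function names here are hypothetical. The last lines show why layout matters: the same values form long runs when stored as a column, but none at all when interleaved row by row.

```python
def run_length_encode(values):
    """Collapse runs of equal consecutive values into (value, run_length) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [tuple(r) for r in runs]


def dictionary_encode(values):
    """Store each distinct value once; replace values by small integer indices."""
    dictionary, indices = {}, []
    for v in values:
        # A new value gets the next free index; a known value reuses its index.
        indices.append(dictionary.setdefault(v, len(dictionary)))
    return list(dictionary), indices


rows = [(1, "FR"), (2, "FR"), (3, "FR"), (4, "DE"), (5, "DE")]
country_column = [country for _, country in rows]
row_layout = [v for row in rows for v in row]

print(run_length_encode(country_column))   # [('FR', 3), ('DE', 2)]
print(len(run_length_encode(row_layout)))  # 10: no two neighbours are equal
print(dictionary_encode(country_column))   # (['FR', 'DE'], [0, 0, 0, 1, 1])
```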

ORC has the best compression rate of all three, thanks to its stripes

Schema Evolution

One challenge in big data is the frequent change of data schemas: e.g., adding/dropping columns and changing column names

If you know that the schema of the data will change several times, the best choice is Avro

The Avro data schema is stored in JSON, and Avro is able to keep data compact even when many different schemas exist
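A simplified sketch of how Avro lets data written with one schema (the writer's) be read with a newer one (the reader's): fields are matched by name, fields only the writer knows are ignored, and fields only the reader knows take their declared default. The real Avro resolution rules also cover aliases, type promotion, unions, and nested types, which this sketch leaves out; the schemas and field names are hypothetical.

```python
def resolve_record(datum, writer_schema, reader_schema):
    """Project a record written with writer_schema onto reader_schema (simplified)."""
    writer_fields = {f["name"] for f in writer_schema["fields"]}
    resolved = {}
    for f in reader_schema["fields"]:
        name = f["name"]
        if name in writer_fields:
            resolved[name] = datum[name]   # present in both schemas
        elif "default" in f:
            resolved[name] = f["default"]  # added later: fall back to the default
        else:
            raise ValueError(f"no value and no default for field {name!r}")
    return resolved                        # writer-only fields are dropped


writer_v1 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
    {"name": "legacy_code", "type": "string"},
]}
reader_v2 = {"type": "record", "name": "User", "fields": [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
    {"name": "email", "type": ["null", "string"], "default": None},
]}

old_record = {"id": 1, "name": "Alice", "legacy_code": "X42"}
print(resolve_record(old_record, writer_v1, reader_v2))
# {'id': 1, 'name': 'Alice', 'email': None}
```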

Nested Columns

If you have a lot of complex nested columns in your dataset and often only query a subset of columns or subcolumns, Parquet is the best choice

Parquet allows you to access and retrieve subcolumns without pulling the rest of the nested column

Framework support

You have to consider the framework you are using when choosing a data format

Data formats perform differently depending on where they are used

ORC works best with Hive (it was designed for it)

Spark provides great support for processing Parquet files

Avro is often a good choice for Kafka (streaming applications)

But… you can use and try all formats with any framework

Thank you !